Candidate screening strategies

With your requirements clearly defined, the next step is creating a focused list of candidate models. Rather than evaluating hundreds of available models, use strategic screening techniques to identify the most promising options.

The screening challenge

The embedding model landscape includes hundreds of options, with new models released regularly. Evaluating every one would be impractical, so use a few screening heuristics to quickly identify the candidates worth detailed evaluation.

Strategic screening heuristics

1. Filter by modality support

This is your first and most critical filter. Models can only work with the data types they're designed for.

Examples:

- Text-only models (e.g., Snowflake Arctic): Cannot process images or audio

- Multimodal models (e.g., Cohere embed-english-v3.0): Support text + other modalities

- Vision models (e.g., ColPali): Designed specifically for document images

- Audio models: Specialized for speech and sound processing

No matter how excellent a model's performance, a text-only model cannot handle image retrieval, and a vision-only model cannot process plain text queries.
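As a minimal sketch of this first filter, the snippet below keeps only the models whose supported modalities cover everything the application needs. The `Candidate` class, the `filter_by_modality` helper, and the catalog entries are hypothetical placeholders for illustration, not real model metadata.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    """Hypothetical record describing one embedding model."""
    name: str
    modalities: frozenset  # e.g. frozenset({"text", "image"})


def filter_by_modality(candidates, required):
    """Keep candidates that support every required modality."""
    required = frozenset(required)
    return [c for c in candidates if required <= c.modalities]


# Placeholder catalog; replace with the models you are actually considering.
catalog = [
    Candidate("text-only-model", frozenset({"text"})),
    Candidate("text-image-model", frozenset({"text", "image"})),
    Candidate("audio-model", frozenset({"audio"})),
]

supported = filter_by_modality(catalog, {"text", "image"})
print([c.name for c in supported])  # ['text-image-model']
```

The subset check (`required <= c.modalities`) treats modality as a hard constraint: a model either covers every required data type or it is dropped before any performance comparison.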

2. Prioritize existing organizational assets

Models your organization already uses offer significant advantages:

Already deployed models:

- Proven evaluation and approval processes

- Existing infrastructure and billing

- Known performance characteristics

- Established operational procedures

Provider ecosystem models: If you already use services from providers such as the following, check what they offer:

- Cohere, Mistral, OpenAI: Check their embedding model offerings

- AWS, Azure, Google Cloud: Explore their model catalogs

- Existing ML platforms: See what models are readily available

These models often have simplified procurement, billing, and integration processes.

3. Consider well-established models

Popular models are generally popular for good reasons:

Well-known models:

| Provider | Model Families |
|---|---|
| Alibaba | gte, Qwen |
| Cohere | embed |
| Google | gemini-embedding |
| NVIDIA | NV-embed |
| OpenAI | text-embedding |
| Snowflake | arctic-embed |

Other model families:

- ColPali: Leading models for document image embeddings

- CLIP/SigLIP: Multimodal (text + image) embeddings

- nomic, bge, MiniLM: Well-regarded text retrieval models

Well-established models typically offer:

- Comprehensive documentation and examples

- Active community support and discussions

- Wide integration support across platforms

- Proven track records across use cases

4. Review benchmark leaders

Standard benchmarks help identify high-performing models, but use them strategically.

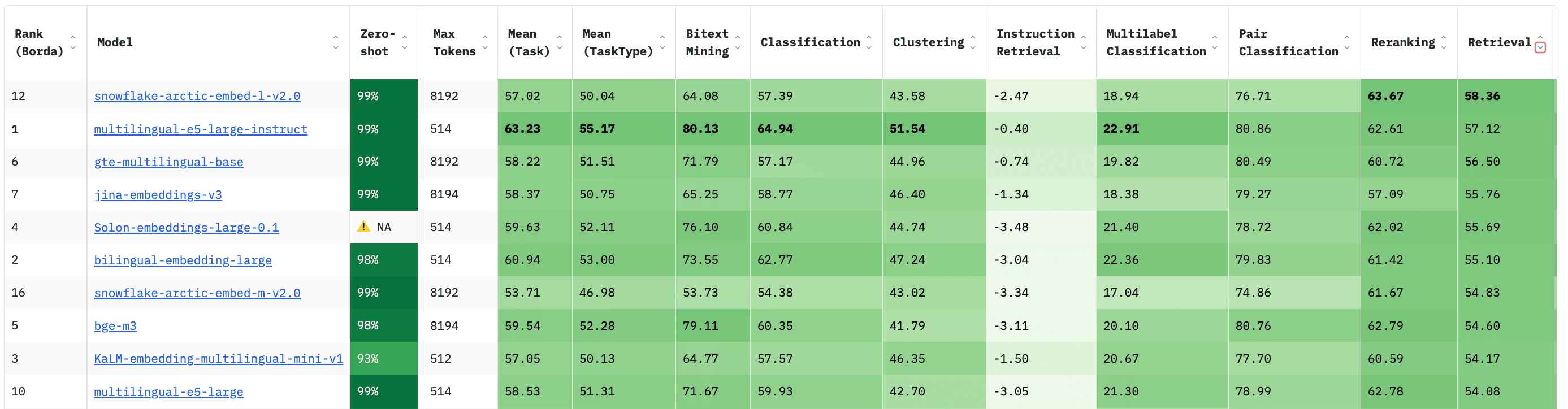

MTEB (Massive Text Embedding Benchmark) filtering:

This view shows models under 1B parameters, sorted by retrieval performance, revealing top performers like:

- Snowflake Arctic models

- Alibaba GTE models

- BAAI BGE models

- Microsoft multilingual-e5 models

- JinaAI embedding models

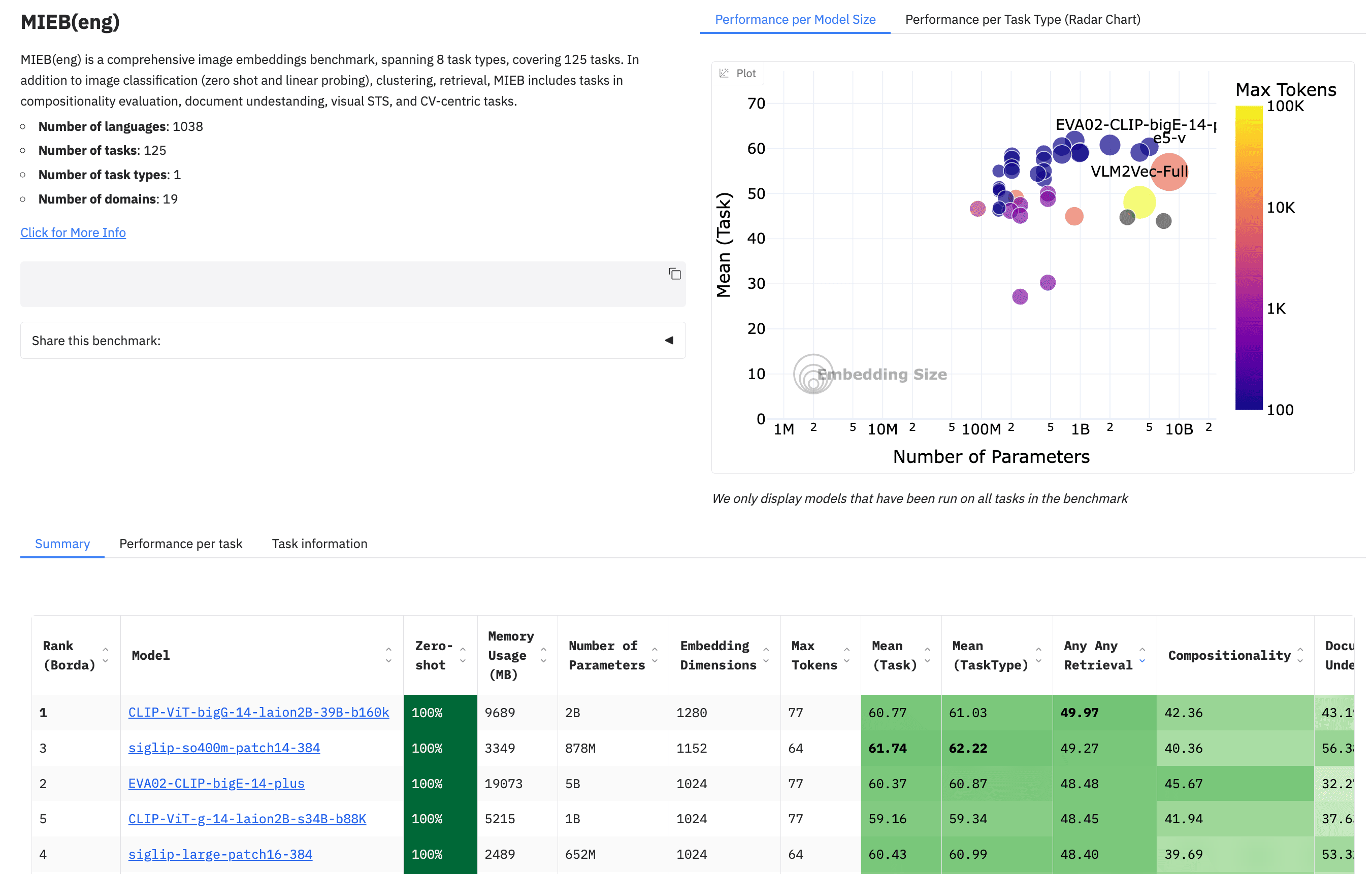

Specialized benchmarks:

For image retrieval tasks, use MIEB (Massive Image Embedding Benchmark).

Benchmark filtering tips:

- Filter by relevant metrics for your use case

- Consider model size constraints (parameters, memory)

- Look for models that balance performance with practical constraints

- Cross-reference with your modality and language requirements
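The filtering tips above can be sketched as a small function applied to leaderboard rows you have exported yourself. The row layout (`model`, `params_b`, `retrieval`), the `shortlist` helper, and all values below are made-up placeholders, not real benchmark results.

```python
def shortlist(rows, max_params_b, metric, top_k):
    """Drop models over the size limit, then rank the rest by one metric."""
    eligible = [
        r for r in rows
        if r["params_b"] <= max_params_b and metric in r
    ]
    return sorted(eligible, key=lambda r: r[metric], reverse=True)[:top_k]


# Placeholder rows; the scores are illustrative only.
rows = [
    {"model": "model-a", "params_b": 0.3, "retrieval": 55.0},
    {"model": "model-b", "params_b": 7.0, "retrieval": 60.0},
    {"model": "model-c", "params_b": 0.1, "retrieval": 50.0},
    {"model": "model-d", "params_b": 0.6, "retrieval": 57.5},
]

leaders = shortlist(rows, max_params_b=1.0, metric="retrieval", top_k=2)
print([r["model"] for r in leaders])  # ['model-d', 'model-a']
```

Applying the size constraint before sorting matters: `model-b` has the highest placeholder score but never reaches the shortlist because it exceeds the 1B-parameter limit.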

Building your candidate list

Practical screening process

- Start with modality: Filter for models supporting your required data types

- Add existing assets: Include models already available in your organization

- Research popular options: Add 3-5 well-known models in your domain

- Check benchmarks: Include 2-3 benchmark leaders that meet your constraints

- Limit scope: Aim for 5-10 total candidates for detailed evaluation
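One way to sketch this process in code is to merge the sources in priority order, drop duplicates, and cap the total. The `build_candidate_list` helper, the source labels, and the model names are hypothetical, and the inputs are assumed to have already passed the modality filter.

```python
def build_candidate_list(sources, max_total=10):
    """Merge (reason, model names) pairs in priority order.

    A model keeps the reason from the first source that listed it,
    and the merged list is truncated to max_total entries.
    """
    selected = {}
    for reason, names in sources:
        for name in names:
            if name not in selected:
                selected[name] = reason
    return list(selected.items())[:max_total]


# Placeholder inputs, ordered from highest to lowest priority.
sources = [
    ("existing asset", ["model-a"]),
    ("popular", ["model-b", "model-c", "model-a"]),
    ("benchmark leader", ["model-d", "model-b"]),
]

candidates = build_candidate_list(sources, max_total=10)
for name, reason in candidates:
    print(f"{name}: {reason}")
```

Because dictionaries preserve insertion order, models that appear in several sources are listed once, under the earliest (highest-priority) reason.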

Example screening results

For a multilingual text retrieval application with moderate latency requirements, the candidate list might include:

- Existing: `text-embedding-ada-002` (if already using OpenAI)

- Popular: `snowflake-arctic-embed-m-v1.5` (balanced performance/size)

- Benchmark leader: `intfloat/multilingual-e5-large` (MTEB top performer)

- Provider option: `embed-multilingual-v3.0` (if using Cohere)

- Specialized: `gte-large-en-v1.5` (strong English performance)

This focused list balances proven options, high performance, and practical constraints.

Avoiding common pitfalls

Don't:

- Include every model that looks interesting

- Focus only on benchmark scores without considering practical constraints

- Ignore licensing or deployment requirements during screening

- Select models that exceed your hardware or budget constraints

Do:

- Maintain a manageable candidate list (5-10 models)

- Consider the full context of your requirements

- Include at least one "safe" option (established, proven model)

- Document why each model was selected for evaluation
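The checklist above could be turned into a quick self-review of the candidate list. This is only a sketch: the `Entry` record, the `review_candidate_list` helper, and the `established` flag are assumptions introduced for illustration, and the 5-10 range simply mirrors the guideline above.

```python
from dataclasses import dataclass


@dataclass
class Entry:
    """Hypothetical record for one shortlisted model."""
    model: str
    rationale: str
    established: bool = False  # True for a "safe", proven option


def review_candidate_list(entries, min_size=5, max_size=10):
    """Return a list of problems; an empty list means the checks pass."""
    problems = []
    if not min_size <= len(entries) <= max_size:
        problems.append(
            f"list has {len(entries)} models; expected {min_size}-{max_size}"
        )
    if not any(e.established for e in entries):
        problems.append("no established 'safe' option included")
    for e in entries:
        if not e.rationale.strip():
            problems.append(f"{e.model}: missing selection rationale")
    return problems


# Placeholder shortlist that deliberately violates every check.
draft = [
    Entry("model-a", "already deployed in another team"),
    Entry("model-b", ""),
]
for problem in review_candidate_list(draft):
    print(problem)
```

Recording a rationale per entry also gives you the documentation trail the last bullet asks for.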

With your candidate list compiled, you're ready for detailed evaluation. Next, we'll explore how to design comprehensive evaluations using your own data and requirements to make the final selection.